“Time” is the most used noun in the English language, yet it remains a mystery. We’ve just completed an amazingly intense and rewarding multidisciplinary conference on the nature of time, and my brain is swimming with ideas and new questions. Rather than trying a summary (the talks will be online soon), here’s my stab at a top ten list partly inspired by our discussions: the things everyone should know about time. [Update: all of these are things I think are true, after quite a bit of deliberation. Not everyone agrees, although of course they should.]

1. Time exists. Might as well get this common question out of the way. Of course time exists — otherwise how would we set our alarm clocks? Time organizes the universe into an ordered series of moments, and thank goodness; what a mess it would be if reality were completely different from moment to moment. The real question is whether time is fundamental or emergent. We used to think that “temperature” was a basic category of nature, but now we know it emerges from the motion of atoms. When it comes to whether time is fundamental, the answer is: nobody knows. My bet is “yes,” but we’ll need to understand quantum gravity much better before we can say for sure.

2. The past and future are equally real. This isn’t completely accepted, but it should be. Intuitively we think that the “now” is real, while the past is fixed and in the books, and the future hasn’t yet occurred. But physics teaches us something remarkable: every event in the past and future is implicit in the current moment. This is hard to see in our everyday lives, since we’re nowhere close to knowing everything about the universe at any moment, nor will we ever be — but the equations don’t lie. As Einstein put it, “It appears therefore more natural to think of physical reality as a four dimensional existence, instead of, as hitherto, the evolution of a three dimensional existence.”

3. Everyone experiences time differently. This is true at the level of both physics and biology. Within physics, we used to have Sir Isaac Newton’s view of time, which was universal and shared by everyone. But then Einstein came along and explained that how much time elapses for a person depends on how they travel through space (especially near the speed of light) as well as the gravitational field (especially if it’s near a black hole). From a biological or psychological perspective, the time measured by atomic clocks isn’t as important as the time measured by our internal rhythms and the accumulation of memories. That happens differently depending on who we are and what we are experiencing; there’s a real sense in which time moves more quickly when we’re older.

4. You live in the past. About 80 milliseconds in the past, to be precise. Use one hand to touch your nose, and the other to touch one of your feet, at exactly the same time. You will experience them as simultaneous acts. But that’s mysterious — clearly it takes more time for the signal to travel up your nerves from your feet to your brain than from your nose. The reconciliation is simple: our conscious experience takes time to assemble, and your brain waits for all the relevant input before it experiences the “now.” Experiments have shown that the lag between things happening and us experiencing them is about 80 milliseconds. (Via conference participant David Eagleman.)

5. Your memory isn’t as good as you think. When you remember an event in the past, your brain uses a very similar technique to imagining the future. The process is less like “replaying a video” than “putting on a play from a script.” If the script is wrong for whatever reason, you can have a false memory that is just as vivid as a true one. Eyewitness testimony, it turns out, is one of the least reliable forms of evidence allowed into courtrooms. (Via conference participants Kathleen McDermott and Henry Roediger.)

6. Consciousness depends on manipulating time. Many cognitive abilities are important for consciousness, and we don’t yet have a complete picture. But it’s clear that the ability to manipulate time and possibility is a crucial feature. In contrast to aquatic life, land-based animals, whose vision-based sensory field extends for hundreds of meters, have time to contemplate a variety of actions and pick the best one. The origin of grammar allowed us to talk about such hypothetical futures with each other. Consciousness wouldn’t be possible without the ability to imagine other times. (Via conference participant Malcolm MacIver.)

7. Disorder increases as time passes. At the heart of every difference between the past and future — memory, aging, causality, free will — is the fact that the universe is evolving from order to disorder. Entropy is increasing, as we physicists say. There are more ways to be disorderly (high entropy) than orderly (low entropy), so the increase of entropy seems natural. But to explain the lower entropy of past times we need to go all the way back to the Big Bang. We still haven’t answered the hard questions: why was entropy low near the Big Bang, and how does increasing entropy account for memory and causality and all the rest? (We heard great talks by David Albert and David Wallace, among others.)

8. Complexity comes and goes. Other than creationists, most people have no trouble appreciating the difference between “orderly” (low entropy) and “complex.” Entropy increases, but complexity is ephemeral; it increases and decreases in complex ways, unsurprisingly enough. Part of the “job” of complex structures is to increase entropy, e.g. in the origin of life. But we’re far from having a complete understanding of this crucial phenomenon. (Talks by Mike Russell, Richard Lenski, Raissa D’Souza.)

9. Aging can be reversed. We all grow old, part of the general trend toward growing disorder. But it’s only the universe as a whole that must increase in entropy, not every individual piece of it. (Otherwise it would be impossible to build a refrigerator.) Reversing the arrow of time for living organisms is a technological challenge, not a physical impossibility. And we’re making progress on a few fronts: stem cells, yeast, and even (with caveats) mice and human muscle tissue. As one biologist told me: “You and I won’t live forever. But as for our grandkids, I’m not placing any bets.”

10. A lifespan is a billion heartbeats. Complex organisms die. Sad though it is in individual cases, it’s a necessary part of the bigger picture; life pushes out the old to make way for the new. Remarkably, there exist simple scaling laws relating animal metabolism to body mass. Larger animals live longer, but they also metabolize more slowly, as manifested in slower heart rates. These effects cancel out, so that animals from shrews to blue whales have lifespans with just about the same number of heartbeats — about one and a half billion, if you simply must be precise. In that very real sense, all animal species experience “the same amount of time.” At least, until we master #9 and become immortal. (Amazing talk by Geoffrey West.)
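The cancellation in item 10 can be made concrete with a small sketch. It assumes the quarter-power exponents commonly cited for these scaling laws (lifespan growing as body mass M to the 1/4 power, heart rate falling as M to the -1/4 power); the prefactors `a` and `b` and all function names are hypothetical placeholders, not measured values. Multiplying heart rate by lifespan, the powers of M cancel, so the lifetime heartbeat count does not depend on mass.

```python
import math

# Assumed quarter-power scaling exponents; the prefactors a and b used
# below are hypothetical placeholders, not fitted biological constants.
LIFESPAN_EXPONENT = 0.25      # lifespan grows as M^(1/4)
HEART_RATE_EXPONENT = -0.25   # heart rate falls as M^(-1/4)


def lifespan(mass_kg: float, a: float = 1.0) -> float:
    """Lifespan in arbitrary time units: a * M^(1/4)."""
    return a * mass_kg ** LIFESPAN_EXPONENT


def heart_rate(mass_kg: float, b: float = 1.0) -> float:
    """Heart rate in arbitrary beats per time unit: b * M^(-1/4)."""
    return b * mass_kg ** HEART_RATE_EXPONENT


def lifetime_heartbeats(mass_kg: float, a: float = 1.0, b: float = 1.0) -> float:
    """Total heartbeats = heart rate * lifespan; the mass terms cancel, leaving a * b."""
    return heart_rate(mass_kg, b) * lifespan(mass_kg, a)


if __name__ == "__main__":
    # Illustrative masses spanning roughly shrew-sized to whale-sized animals.
    for mass in (0.005, 1.0, 70.0, 100_000.0):
        print(f"{mass:>10} kg -> {lifetime_heartbeats(mass):.6f} (units of a*b)")
```

Running the script prints the same heartbeat count for every mass, which is the cancellation described above. Real animals scatter around the trend, and the actual figure (the roughly one and a half billion quoted in item 10) depends on measured prefactors that this sketch does not supply.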

20111207

20111026

14 More Wonderful Words With No English Equivalent

1. Shemomedjamo (Georgian)

You know when you’re really full, but your meal is just so delicious, you can’t stop eating it? The Georgians feel your pain. This word means, “I accidentally ate the whole thing.”

2. Pelinti (Buli, Ghana)

Your friend bites into a piece of piping hot pizza, then opens his mouth and sort of tilts his head around while making an “aaaarrrahh” noise. The Ghanaians have a word for that. More specifically, it means “to move hot food around in your mouth.”

3. Layogenic (Tagalog)

Remember in Clueless when Cher describes someone as “a full-on Monet…from far away, it’s OK, but up close it’s a big old mess”? That’s exactly what this word means.

4. Rhwe (Tsonga, South Africa)

College kids, relax. There’s actually a word for “to sleep on the floor without a mat, while drunk and naked.”

5. Zeg (Georgian)

It means “the day after tomorrow.” Seriously, why don’t we have a word for that in English?

6. Pålegg (Norwegian)

Sandwich Artists unite! The Norwegians have a non-specific descriptor for anything – ham, cheese, jam, Nutella, mustard, herring, pickles, Doritos, you name it – you might consider putting into a sandwich.

7. Lagom (Swedish)

Maybe Goldilocks was Swedish? This slippery little word is hard to define, but means something like, “Not too much, and not too little, but juuuuust right.”

8. Tartle (Scots)

The nearly onomatopoeic word for that panicky hesitation just before you have to introduce someone whose name you can’t quite remember.

9. Koi No Yokan (Japanese)

The sense upon first meeting a person that the two of you are going to fall in love.

10. Mamihlapinatapai (Yaghan language of Tierra del Fuego)

This word captures that special look shared between two people, when both are wishing that the other would do something that they both want, but neither wants to be the one to do it.

11. Fremdschämen (German); Myötähäpeä (Finnish)

The kinder, gentler cousins of Schadenfreude, both these words mean something akin to “vicarious embarrassment.” Or, in other words, that-feeling-you-get-when-you-watch-Meet-the-Parents.

12. Cafuné (Brazilian Portuguese)

Leave it to the Brazilians to come up with a word for “tenderly running your fingers through your lover’s hair.”

13. Greng-jai (Thai)

That feeling you get when you don’t want someone to do something for you because it would be a pain for them.

14. Kælling (Danish)

You know that woman who stands on her doorstep (or in line at the supermarket, or at the park, or in a restaurant) cursing at her children? The Danes know her, too.

20111019

Ingestion / Planet in a Bottle

Christopher Turner

“Ingestion” is a column that explores food within a framework informed by aesthetics, history, and philosophy.

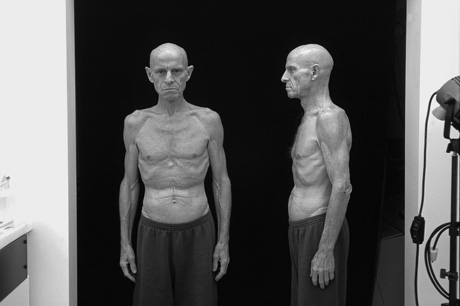

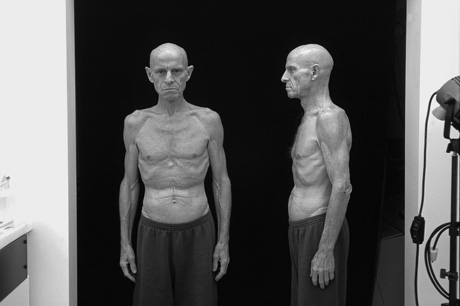

Self-portrait by Dr. Roy Walford illustrating the startling effects of his CRON-diet. Courtesy the Roy L. Walford Trust.

At 8:15 am on 26 September 1991, eight “bionauts,” as they called themselves, wearing identical red Star Trek–like jumpsuits (made for them by Marilyn Monroe’s former dressmaker) waved to the assembled crowd and climbed through an airlock door in the Arizona desert. They shut it behind them and opened another that led into a series of hermetically sealed greenhouses in which they would live for the next two years. The three-acre complex of interconnected glass Mesoamerican pyramids, geodesic domes, and vaulted structures contained a tropical rain forest, a grassland savannah, a mangrove wetland, a farm, and a salt-water ocean with a wave machine and gravelly beach. This was Biosphere 2—the first biosphere being Earth—a $150 million experiment designed to see if, in a climate of nuclear and ecological fear, the colonization of space might be possible. The project was described in the press as a “planet in a bottle,” “Eden revisited,” and “Greenhouse Ark.”

Before entering, the Biosphere’s pioneer inhabitants had enjoyed a final, hearty breakfast consisting of ham, eggs, and buttered bread, but from here on, they would be self-sufficient—everything they ate would be grown, processed, and prepared in their airtight bubble. A few years before designing the Biosphere, architect Phil Hawes had proposed a space city 110 feet in diameter, a flying doughnut that would spin to create its own gravity and in which miniature animals could be kept and plants cultivated, along with a store of cryogenically frozen seed for the propagation of twenty thousand other species. The space frame of the Biosphere, a terrestrial version of such a sci-fi fantasy, had been built by an engineer who had worked with Buckminster Fuller, author of Operating Manual for Spaceship Earth, which compared the planet to a spaceship flying through the universe with finite resources that could never be resupplied. The Biosphere was intended as a similar symbol of our ecological plight.

The project caught the national imagination. Discover, the popular science magazine, declared the mission “the most exciting venture to be undertaken in the US since President Kennedy launched us towards the moon.” Tourists came by the busload to peer through the glass at the bionauts, trapped in their vivarium like laboratory rats (the project was an acknowledged precursor to the Big Brother reality-TV show). Over the first six months, 159,000 people visited, including William S. Burroughs and Timothy Leary.

Life inside the glass city was colored by its inventors’ countercultural idealism. They had all met on the Synergia Ranch, a commune they founded near Santa Fe, New Mexico, in the late 1960s and ran according to Wilhelm Reich’s idea of “work democracy”; they practiced improvisational theatre and made a fortune building developments of adobe condos, which helped pay for the Biosphere (the commune is described in Laurence Veysey’s 1971 ethnography, The Communal Experience: Anarchist and Mystical Communities in Twentieth-Century America). The Biosphere’s half-acre arable plot had been cultivated for three months in preparation for the crew’s arrival to what was supposed to be a high-tech Eden. However, the members of the chosen team lacked experience as farmers and, despite reading how-to manuals with titles such as How to Grow More Vegetables than You Ever Thought Possible on Less Land than You Can Imagine, yields were disappointing and they began to starve.

The bionauts had to perform hard physical labor to produce their food, but there was only enough for them to consume a measly 1750 calories a day, and they found it difficult to sustain such active lives. On a diet of beans, porridge, beets, carrots, and sweet potatoes, their weight plummeted and their skin began to go orange as a result of the excess beta-carotene in their diet. “It was very stressful, especially with a crew like that,” recalled Sally Silverstone, the Agriculture and Food Systems Manager, “essentially white middle-class, upper-middle-class Western individuals who had never been short of food in their whole life—it was a tremendous shock.”

Silverstone would weigh out the day’s allotment of fresh food for whoever’s turn it was to cook, entering into a computer database the amount of nutrients to check that the crew was keeping above the recommended intake levels of calories, proteins, and fats. At first the meals were served buffet style but, as the crew got hungrier, the cooks scrupulously divided their offerings into equal portions. The bionauts left every meal still hungry and could think about little but food; their memoirs of the two-year project are full of references to their recurring dreams of McDonald’s hamburgers, lobster, sushi, Snickers-bar cheesecake, lox and bagels, croissants, and whiskey. They bartered most of their possessions, but food was too precious to trade. They became sluggish and irritable through lack of it, and were driven by hunger to acts of sabotage. Bananas were stolen from the basement storeroom; the freezer had to be locked.

The medic who presided over the team’s health was Dr. Roy Walford, a professor of pathology at UCLA Medical School who had served in the Korean War and, at sixty-nine, was the oldest member of the crew. He was a gerontologist and specialist in life extension who, in studies with mice, thought that he’d successfully shown that one could live longer by eating less; his skinny mice outlived his fat ones by as much as forty percent. In his books Maximum Life Span (1983) and The 120-Year Diet (1986), Walford promised that “calorie restriction with optimal nutrition, which I call the ‘CRON-diet,’ will retard your rate of aging, extend lifespan (up to perhaps 150 to 160 years, depending on when you start and how thoroughly you hold to it), and markedly decrease susceptibility to most major diseases.”

The disappointing crop yields in the Biosphere allowed Walford to experiment with his “healthy starvation diet” on humans in unprecedented laboratory conditions. While his subjects pleaded with mission control for more supplies, Walford—who had been on the CRON-diet for years—maintained that their daily calorie intake was sufficient. “I think if there had been any other nutritionist or physician, they would have freaked out and said, ‘We’re starving,’” Walford said, “but I knew we were actually on a program of health enhancement.” Every two weeks he would give them all a full medical checkup. He discovered that their blood pressure, blood sugar, and cholesterol counts did indeed drop to healthier levels—which he presumed would retard aging and extend maximal lifespan as it seemed to in mice—though an unanticipated side effect of this was that their blood was awash with the toxins that had been stored in their rapidly dissolving body fat.

Group photo of candidates for Biosphere 2, 1989. Only six of the fourteen individuals shown were ultimately chosen to live in the biosphere, along with two others not present. A trim Roy Walford is in the back row sporting a moustache.

In their 1993 book Life Under Glass: The Inside Story of Biosphere 2, crew members Abigail Alling and Mark Nelson note: “Each biospherian responded differently to the diet. Initially, over the first six months or so, we lost between eighteen and fifty-eight pounds each. ... Roy continued to assure us not to worry when we commented on our baggy pants and loose shirts because our overall health was actually improved by the combination of our diet and the superb freshness and quality of the organically grown food.” They acknowledged that their natural diet was incredibly healthy: “The only problem was that there never seemed to be enough of it.” Every month Walford took a Polaroid photo of each member that recorded their dramatic physiological changes—men lost eighteen percent of their body weight, women ten percent, mostly within the first six months.

During her two years inside, Silverstone wrote a cookbook with the fantastic title, Eating In: From the Field to the Kitchen in Biosphere 2, which contains forty-eight recipes from the biospherian diet, along with the story of how the crew planted, grew, processed, and prepared the food on their half-acre farm. In the book, the team are photographed a year into the mission, standing behind a table loaded with food, but they all look gaunt and bony. By this time, the team had splintered into two opposing factions that barely spoke to each other, but they still ate together (a favorite dinner spot was the balcony overlooking the Intensive Agriculture Biome, from which you could see the sun setting over the Catalina Mountains and which they dubbed the Café Visionaire). They stockpiled their supplies for such feasts, sacrificing livestock and saving milk, eggs, figs, and papayas, and lubricating the meals with homebrew or punch spiked with alcohol from Walford’s medical supplies. The menu for their first Thanksgiving feast was:

Baked chicken with water chestnut stuffing

Sautéed ginger beets

Baked sweet potatoes

Stuffed chili peppers sautéed with goat cheese

Tossed salad with a lemon-yogurt dressing

Orange banana bread

Tibetan rice beer (chung)

Sweet potato pie topped with yogurt

Cheesecake

Coffee with steamed goat’s milk

The rest of the time they were so famished they chewed on peanut shells, coffee grounds, and banana skins. Fennel leaves became known as “biospherian chewing gum.”

When the crew emerged from the experiment after two years, the project was judged by the media to be a failure. Early in the second year, carbon dioxide levels had risen so high (twelve times that of the outside) that the crew were growing faint and Walford asked for more oxygen to be pumped into the structure on two occasions. The Biosphere had proved not to be a self-sufficient, autonomous world as it would need to be if it were to become a base station on another planet. In 1999, by which time the Biosphere had been taken over by Columbia University as a research center into the effects of climate change, Time magazine judged it one of the hundred worst ideas of the twentieth century. But Walford’s experiments with diet, reported in the Proceedings of the National Academy of Sciences in December 1992, were judged a success. The bionauts not only showed dramatic weight loss (stabilizing at an average of fourteen percent overall), but also lower blood pressure and cholesterol, more efficient metabolisms, and enhanced immune systems.

The year after his release, Walford began the Calorie Restriction Society, which now has seven thousand members (it is estimated that one hundred thousand practice the diet worldwide). The CRONIES, as Walford’s devotees refer to themselves, measure and weigh out everything they consume using electronic scales, the idea being that each calorie you consume should contain as many nutrients as possible (you can download Cron-O-Meter software free on the internet to help plan such a diet). The CRONIES live at the border of self-starvation, in the hope of delayed gratification; like their mentor, they live in their own biosphere, a self-absorbed bubble of discipline and resolve, and maintain the science-fiction dream of an age when we will all be able to live forever. Calorie restriction failed to dramatically increase Walford’s lifespan, however: he died in 2004 of Lou Gehrig’s disease, aged seventy-nine.

“Ingestion” is a column that explores food within a framework informed by aesthetics, history, and philosophy.

Self-portrait by Dr. Roy Walford illustrating the startling effects of his CRON-diet. Courtesy the Roy L. Walford Trust.

At 8:15 am on 26 September 1991, eight “bionauts,” as they called themselves, wearing identical red Star Trek–like jumpsuits (made for them by Marilyn Monroe’s former dressmaker) waved to the assembled crowd and climbed through an airlock door in the Arizona desert. They shut it behind them and opened another that led into a series of hermetically sealed greenhouses in which they would live for the next two years. The three-acre complex of interconnected glass Mesoamerican pyramids, geodesic domes, and vaulted structures contained a tropical rain forest, a grassland savannah, a mangrove wetland, a farm, and a salt-water ocean with a wave machine and gravelly beach. This was Biosphere 2—the first biosphere being Earth—a $150 million experiment designed to see if, in a climate of nuclear and ecological fear, the colonization of space might be possible. The project was described in the press as a “planet in a bottle,” “Eden revisited,” and “Greenhouse Ark.”

Before entering, the Biosphere’s pioneer inhabitants had enjoyed a final, hearty breakfast consisting of ham, eggs, and buttered bread, but from here on, they would be self-sufficient—everything they ate would be grown, processed, and prepared in their airtight bubble. A few years before designing the Biosphere, architect Phil Hawes had proposed a space city 110 feet in diameter, a flying doughnut that would spin to create its own gravity and in which miniature animals could be kept and plants cultivated, along with a store of cryogenically frozen seed for the propagation of twenty thousand other species. The space frame of the Biosphere, a terrestrial version of such a sci-fi fantasy, had been built by an engineer who had worked with Buckminster Fuller, author of Operating Manual for Spaceship Earth, which compared the planet to a spaceship flying through the universe with finite resources that could never be resupplied. The Biosphere was intended as a similar symbol of our ecological plight.

The project caught the national imagination. Discover, the popular science magazine, declared the mission “the most exciting venture to be undertaken in the US since President Kennedy launched us towards the moon.” Tourists came by the busload to peer through the glass at the bionauts, trapped in their vivarium like laboratory rats (the project was an acknowledged precursor to the Big Brother reality-TV show). Over the first six months, 159,000 people visited, including William S. Burroughs and Timothy Leary.

Life inside the glass city was colored by its inventors’ countercultural idealism. They had all met on the Synergia Ranch, a commune they founded near Santa Fe, New Mexico, in the late 1960s and ran according to Wilhelm Reich’s idea of “work democracy”; they practiced improvisational theatre and made a fortune building developments of adobe condos, which helped pay for the Biosphere (the commune is described in Laurence Veysey’s 1971 ethnography, The Communal Experience: Anarchist and Mystical Communities in Twentieth-Century America). The Biosphere’s half-acre arable plot had been cultivated for three months in preparation for the crew’s arrival to what was supposed to be a high-tech Eden. However, the members of the chosen team lacked experience as farmers and, despite reading how-to manuals with titles such as How to Grow More Vegetables than You Ever Thought Possible on Less Land than You Can Imagine, yields were disappointing and they began to starve.

The bionauts had to perform hard physical labor to produce their food, but there was only enough for them to consume a measly 1750 calories a day, and they found it difficult to sustain such active lives. On a diet of beans, porridge, beets, carrots, and sweet potatoes, their weight plummeted and their skin began to go orange as a result of the excess beta-carotene in their diet. “It was very stressful, especially with a crew like that,” recalled Sally Silverstone, the Agriculture and Food Systems Manager, “essentially white middle-class, upper-middle-class Western individuals who had never been short of food in their whole life—it was a tremendous shock.”

Silverstone would weigh out the day’s allotment of fresh food for whoever’s turn it was to cook, entering into a computer database the amount of nutrients to check that the crew was keeping above the recommended intake levels of calories, proteins, and fats. At first the meals were served buffet style but, as the crew got hungrier, the cooks scrupulously divided their offerings into equal portions. Leaving every meal still hungry, all the bionauts could think about was food, and their memoirs of the two-year project are full of references to their recurring dreams of McDonald’s hamburgers, lobster, sushi, Snickers-bar cheesecake, lox and bagels, croissants, and whiskey. They bartered most of their possessions, but food was too precious to trade. They became sluggish and irritable through lack of it, and were driven by hunger to acts of sabotage. Bananas were stolen from the basement storeroom; the freezer had to be locked.

The medic who presided over the team’s health was Dr. Roy Walford, a professor of pathology at UCLA Medical School who had served in the Korean War and, at sixty-nine, was the oldest member of the crew. He was a gerontologist and specialist in life extension who, in studies with mice, thought that he’d successfully shown that one could live longer by eating less; his skinny mice outlived his fat ones by as much as forty percent. In his books Maximum Life Span (1983) and The 120-Year Diet (1986), Walford promised that “calorie restriction with optimal nutrition, which I call the ‘CRON-diet,’ will retard your rate of aging, extend lifespan (up to perhaps 150 to 160 years, depending on when you start and how thoroughly you hold to it), and markedly decrease susceptibility to most major diseases.”

The disappointing crop yields in the Biosphere allowed Walford to experiment with his “healthy starvation diet” on humans in unprecedented laboratory conditions. While his subjects pleaded with mission control for more supplies, Walford—who had been on the CRON-diet for years—maintained that their daily calorie intake was sufficient. “I think if there had been any other nutritionist or physician, they would have freaked out and said, ‘We’re starving,’” Walford said, “but I knew we were actually on a program of health enhancement.” Every two weeks he would give them all a full medical checkup. He discovered that their blood pressure, blood sugar, and cholesterol counts did indeed drop to healthier levels—which he presumed would retard aging and extend maximal lifespan as it seemed to in mice—though an unanticipated side effect of this was that their blood was awash with the toxins that had been stored in their rapidly dissolving body fat.

Group photo of candidates for Biosphere 2, 1989. Only six of the fourteen individuals shown were ultimately chosen to live in the biosphere, along with two others not present. A trim Roy Walford is in the back row sporting a moustache.

In their 1993 book Life Under Glass: The Inside Story of Biosphere 2, crew members Abigail Alling and Mark Nelson note: “Each biospherian responded differently to the diet. Initially, over the first six months or so, we lost between eighteen and fifty-eight pounds each. ... Roy continued to assure us not to worry when we commented on our baggy pants and loose shirts because our overall health was actually improved by the combination of our diet and the superb freshness and quality of the organically grown food.” They acknowledged that their natural diet was incredibly healthy: “The only problem was that there never seemed to be enough of it.” Every month Walford took a Polaroid photo of each member that recorded their dramatic physiological changes—men lost eighteen percent of their body weight, women ten percent, mostly within the first six months.

During her two years inside, Silverstone wrote a cookbook with the fantastic title, Eating In: From the Field to the Kitchen in Biosphere 2, which contains forty-eight recipes from the biospherian diet, along with the story of how the crew planted, grew, processed, and prepared the food on their half-acre farm. In the book, the team are photographed a year into the mission, standing behind a table loaded with food, but they all look gaunt and bony. By this time, the team had splintered into two opposing factions that barely spoke to each other, but they still ate together (a favorite dinner spot was the balcony overlooking the Intensive Agriculture Biome, from which you could see the sun setting over the Catalina Mountains and which they dubbed the Café Visionaire). They stockpiled their supplies for such feasts, sacrificing livestock and saving milk, eggs, figs, and papayas, and lubricating the meals with homebrew or punch spiked with alcohol from Walford’s medical supplies. The menu for their first Thanksgiving feast was:

Baked chicken with water chestnut stuffing

Sautéed ginger beets

Baked sweet potatoes

Stuffed chili peppers sautéed with goat cheese

Tossed salad with a lemon-yogurt dressing

Orange banana bread

Tibetan rice beer (chung)

Sweet potato pie topped with yogurt

Cheesecake

Coffee with steamed goat’s milk

The rest of the time they were so famished they chewed on peanut shells, coffee grounds, and banana skins. Fennel leaves became known as “biospherian chewing gum.”

When the crew emerged from the experiment after two years, the project was judged by the media to be a failure. Early in the second year, carbon dioxide levels had risen so high (twelve times that of the outside) that the crew were growing faint and Walford asked for more oxygen to be pumped into the structure on two occasions. The Biosphere had proved not to be a self-sufficient, autonomous world as it would need to be if it were to become a base station on another planet. In 1999, by which time the Biosphere had been taken over by Columbia University as a research center into the effects of climate change, Time magazine judged it one of the hundred worst ideas of the twentieth century. But Walford’s experiments with diet, reported in the Proceedings of the National Academy of Sciences in December 1992, were judged a success. The bionauts not only showed dramatic weight loss (stabilizing at an average of fourteen percent overall), but also lower blood pressure and cholesterol, more efficient metabolisms, and enhanced immune systems.

The year after his release, Walford began the Calorie Restriction Society, which now has seven thousand members (it is estimated that one hundred thousand practice the diet worldwide). The CRONIES, as Walford’s devotees refer to themselves, measure and weigh out everything they consume using electronic scales, the idea being that each calorie you consume should contain as many nutrients as possible (you can download Cron-O-Meter software free on the internet to help plan such a diet). The CRONIES live at the border of self-starvation, in the hope of delayed gratification; like their mentor, they live in their own biosphere, a self-absorbed bubble of discipline and resolve, and maintain the science-fiction dream of an age when we will all be able to live forever. Calorie restriction failed to dramatically increase Walford’s lifespan, however: he died in 2004 of Lou Gehrig’s disease, aged seventy-nine.

Willat Effect Experiments With Tea

The Willat Effect is the hedonic change caused by side-by-side comparison of similar things. Your hedonic response to the things compared (e.g., two or more dark chocolates) expands in both directions. The “better” things become more pleasant and the “worse” things become less pleasant. In my experience, it’s a big change, easy to notice.

I discovered the Willat Effect when my friend Carl Willat offered me five different limoncellos side by side. Knowing that he likes it, his friends had given them to him. Perhaps three were homemade, two store-bought. I’d had plenty of limoncello before that, but always one version at a time. Within seconds of tasting the five versions side by side, I came to like two of them (with more complex flavors) more than the rest. One or two of them I started to dislike. When you put two similar things next to each other, of course you see their differences more clearly. What’s impressive is the hedonic change.

The Willat Effect supports my ideas about human evolution because it pushes people toward connoisseurship. (I predict it won’t occur with animals.) The fact that repeating elements are found in so many decorating schemes and patterns meant to be pretty (e.g., wallpapers, textile patterns, rugs, choreography) suggests that we get pleasure from putting similar things side by side — the very state that produces the Willat Effect. According to my theory of human evolution, connoisseurship evolved because it created demand for hard-to-make goods, which helped the most skilled artisans make a living. Carl’s limoncello tasting made me a mini-connoisseur of limoncello. I started buying it much more often and bought more expensive brands, thus helping the best limoncello makers make a living. Connoisseurs turn surplus into innovation by giving the most skilled artisans more time and freedom to innovate.

Does the Willat Effect have practical value? Could it improve my life? Recently I decided to see if it could make me a green tea connoisseur. Ever since I discovered the Shangri-La Diet (calories without smell), I’d been drinking tea (smell without calories) almost daily but I was no connoisseur. Nor had I done many side-by-side comparisons. At home, I had always made one cup at a time.

In Beijing, where I am now, I can easily buy many green teas. I got three identical teapots (SAMA SAG-08) and three cheap green teas. I drink tea every morning. Instead of brewing one pot, I started making two or three pots at the same time and comparing the results. I compared different teas and the same tea brewed different lengths of time (Carl’s idea).

I’ve been doing this about two weeks. The results so far:

1. The cheapest tea became undrinkable. I decided never to buy it again and not to drink the rest of my purchase. I will use it for kombucha. Two of the three teas cost about twice as much as the cheapest one. After a few side-by-side comparisons I liked the more expensive ones considerably more than the cheaper one. The two more expensive ones cost about the same but, weirdly, I liked the one that cost (slightly) more a little better than the one that cost less. (Tea is sold in bulk with no packaging or branding, so the price I pay is closely related to what the grower was paid. The buyers taste it and decide what it’s worth.)

2. I decided to infuse the tea leaves only once. (Usual practice is to infuse green tea two or more times.) The quality of later infusions was too low, I decided. Before this, I had found second and later infusions acceptable.

The Willat Effect is working, in other words. After a decade of drinking tea, my practices suddenly changed. I will buy different teas and brew them differently. I will spend a lot more per cup since (a) each cup will require fresh tea, (b) I won’t buy the cheapest tea, and (c) I have become far more interested in green tea, partly because each cup tastes better, partly because I am curious if more expensive varieties taste better. When I bought the three varieties I have now I didn’t bother to learn their names; I identified them by price. In the future I will learn the names.

To get the Willat Effect, the things being compared must be quite similar. For example, comparing green tea with black tea does nothing. I have learned a methodological lesson: tea is a great medium for studying this, not only because it’s cheap but also because you can easily get similar-tasting teas by brewing the same tea for different lengths of time. I haven’t yet tried different water temperatures, but that too might work.

I have done similar things before. I bought several versions of orange marmalade, did side-by-side tastings, and indeed became an orange marmalade connoisseur. After that I bought only expensive versions. After a few side-by-side comparisons of cheese that included expensive cheeses, I stopped buying cheap cheese. You could say I am still an orange marmalade and cheese connoisseur but this has no effect on my current life. Because I avoid sugar, I don’t eat orange marmalade. Because of all the butter I eat, I rarely eat cheese. My budding green tea connoisseurship, however, is making a difference because I drink tea every day.

20111014

What's the best way to escape the police in a high-speed car chase?

The following strategies may improve your odds:

- Try to elude the police in a district with a strict pursuit policy. Because of the danger of high-speed pursuits, many districts will call off high-speed chases almost instantly.

- Going near the airport or going into tunnels may require helicopters chasing you to give up pursuit

- Cut across a field / go off-road -- cops may try to continue on the road and meet you on the other side. You may be able to escape before they make it around

- Pull ahead of the cops then turn off the road. Repeat with multiple turns and eventually stop and kill your lights.

Simple: Elude law enforcement in a jurisdiction with a strict pursuit policy. In my department, unless a suspect vehicle was an obvious DWI (swerving white line to white line, erratic speed changes) or had committed a violent felony, vehicle pursuits got cancelled by a commander almost instantly. There is so much liability at play in a pursuit situation that many departments are getting very conservative in their response protocols to situations like this.

As far as maneuvering tactics when they're actually pursuing you, there's really no sense diving in - you've got too many things going against you:

- Communication. Every involved officer, along with their supervisors and their supervisors' supervisors, has a radio, both in-car and portable on their person. Can you dial your cell phone and drive with one hand at 120 mph while you coordinate with accomplices miles down the road? Probably not.

- Collaboration. If a pursuit has been sanctioned, the longer it goes on the more officers are going to be in on the hunt. And if you stray toward the boundary of a jurisdiction (city limit, county border, and so on), you're going to get mutual aid response from other agencies, who may be even less restricted than your original pursuers. Have fun with that.

- Convergence. You can go in one direction at a time, but law enforcement response to your location will be omnidirectional. You're going to have LEOs swarming your vehicle from 360 degrees and, if a helicopter gets tossed in the mix, three dimensions. You simply cannot go fast enough to counter this. Even if you were trying to elude the North Dakota Highway Patrol on I-94 in an Italian supercar, you've still got a lot of variables to buck regarding interagency cooperation.

- Contraptions. Hope you've got solid rubber tires, because if the agency gets a lock on your direction of flight, you're getting spiked - and if you hit spikes, you're hosed. You'll drive for a while, because spike strips are designed to puncture tires so they slowly deflate as opposed to blowing out. But once they're flat, driving on them will make them disintegrate; then you're driving on rims. Now you're limited to fifteen to twenty miles per hour, and you're in danger of your vehicle catching fire from the spraying sparks. Meanwhile, the agency is moving the K-9 unit to point position so when you shoulder your smoldering jalopy and make a run for it, Cujo's got less ground to cover before he eats your forearm. Did you think to wear chain mail?

- Concentration. How often do you drive in this manner? Unless you're running because you're on parole, this is likely your first dance. Sure, you've driven fast before - for a while. Then, for whatever reason, you got uncomfortable and backed off. Maybe your car made a sound you got concerned about, maybe you caught a glint you thought might be a trooper's windshield, maybe you thought you heard the faintest pulses of a siren. Whatever it was, it weakened your resolve and you slowed down. You have no such luxury here. And while this is fresh for you, to many of the people pursuing you it is another day, another dollar. They've practiced this in training scenarios and have been involved in pursuits in the field. They run code multiple times a week. Even if one of your pursuers was a rookie who got eaten up by the stress, there will be a dozen vets to take his place.

- Cognizance. Unless you've lived around and driven on your path of flight for decades, I can almost guarantee you do not know it as well as your pursuers. I drove a hundred miles a night, four nights a week, on the same few dozen streets in my beat. I knew every pothole and curbstone, every back alley and shortcut. Plus, supplementing my knowledge was dispatch, who had a real-time, God's-eye view of the situation, and who could anticipate upcoming turns based on officers' GPS and current road conditions.

- Conveyances. Your chosen city of flight may have the rattiest squad cars in the country, but they have the distinct benefit of redundancy. Your escape vehicle is precious, because there is only one. Nuke a tire from hitting spikes or a pothole, and you're roasted. If a patrol car has a blowout, that unit will fall out and be replaced by another. If you think your car can outrun and outlast what will effectively be an infinite number of responding unit vehicles (when you account for interagency involvement), have at it; otherwise, you may need to rethink your day.

- Control. What is your flight plan - are you going to rely on top end speed on the open highway? Or are you going to try to lose responding officers in an intricate series of turns? You've got a tall order ahead either way.

- First, if you've got something in your hands that can outperform Crown Victoria and Charger interceptors on the interstate, you're going to be relatively easy to spot - you won't be doing this in a stock Toyota. Second, you've got Little Brother to worry about - if you wax someone's doors at double the speed limit, they're probably going to call the police. Instant update to last known location and direction of travel, which allows retriangulation if you managed to create space. You probably haven't, though, because even with vehicles capable of impressive top end speed, there comes a point where the vehicle is so functionally light you can no longer safely operate it in real world driving conditions. My top speed running code in a Crown Vic was 134 mph, which was frankly stupid - the suspension was floating so badly that driving over a heads-up penny probably would have sent me into a terminal fishtail. This all means that, while you may maintain some semblance of distance between yourself and the point car, you're very unlikely to be completely leaving them in your sonic wake.

- Alternatively, if you're banking on turns (you got me, pun intended), you're going to have to keep your head about you. Stress has a tendency to get the better of your attempts at rational thought. Was that three lefts or four? This looks familiar, I'd better go the other way...was that a school crossing sign or a dead end sign? Is Main Street continuous this far south? Which side of the tracks am I on? Ah, now we're cooking with - what? Since when is there a cul-de-sac here? Game over - whether that consists of walking backward at gunpoint or feeling Cujo getting his nom nom nom on.

20111003

The Coin Flip: A Fundamentally Unfair Proposition?

Have you ever flipped a coin as a way of deciding something with another person? The answer is probably yes. And you probably did so assuming you were getting a fair deal, because, as everybody knows, a coin is equally likely to show heads or tails after a single flip—unless it's been shaved or weighted or has a week-old smear of coffee on its underbelly.

So when your friend places a coin on his thumb and says "call it in the air", you realize that it doesn't really matter whether you pick heads or tails. Every person has a preference, of course—heads or tails might feel "luckier" to you—but logically the chances are equal.

Or are they?

Granted, everybody knows that newly-minted coins are born with tiny imperfections, minute deviations introduced by the fabrication process. Everybody knows that, over time, a coin will wear and tear, picking up scratches, dings, dents, bacteria, and finger-grease. And everybody knows that these imperfections can affect the physics of the coin flip, biasing the results by some infinitesimal amount which in practice we ignore.

But let's assume that's not the case.

Let's assume the coin is fabricated perfectly, down to the last vigintillionth of a yoctometer. And, since it's possible to train one's thumb to flip a coin such that it comes up heads or tails a huge percentage of the time, let's assume the person flipping the coin isn't a magician or a prestidigitator. In other words, let's assume both a perfect coin and an honest toss, such as the kind you might make with a friend to decide who pays for lunch.

In that case there's an absolute right and wrong answer to the age-old question...

Heads or tails? It matters, because the two outcomes of a typical coin flip are not equally likely.

The 50-50 proposition is actually more of a 51-49 proposition, if not worse. The sacred coin flip exhibits (at minimum) a whopping 1% bias, and possibly much more. 1% may not sound like a lot, but it's more than the typical casino edge in a game of blackjack or slots. What's more, you can take advantage of this little-known fact to give yourself an edge in all future coin-flip battles.
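To see what a 51-49 split is worth to whoever makes the call, note that on an even-money bet (win one unit, lose one unit) the expected gain per unit staked is 2p - 1, so a one-percentage-point shift in the probability becomes a two-cent edge per dollar. The sketch below is a minimal illustration assuming p = 0.51 holds exactly (the post itself says the bias may be worse); the function names are hypothetical, and the seeded simulation is only a sanity check on the formula.

```python
import random


def expected_edge(p_win: float) -> float:
    """Expected profit per unit staked on an even-money bet won with probability p_win."""
    # +1 unit on a win, -1 unit on a loss; this simplifies to 2 * p_win - 1.
    return p_win * 1.0 + (1.0 - p_win) * -1.0


def simulate_edge(p_win: float, n_bets: int, seed: int = 0) -> float:
    """Average profit per bet over n_bets simulated even-money bets."""
    rng = random.Random(seed)  # seeded so reruns give the same estimate
    total = 0
    for _ in range(n_bets):
        total += 1 if rng.random() < p_win else -1
    return total / n_bets


if __name__ == "__main__":
    # Assumed win probabilities: a perfectly fair call and the post's 51% call.
    for p in (0.50, 0.51):
        print(f"p_win={p:.2f}  exact edge={expected_edge(p):+.4f}  "
              f"simulated={simulate_edge(p, 200_000):+.4f}")
```

The simulated figure wobbles around the exact one by a few thousandths at this sample size, which is also why a 1% bias is invisible in the handful of flips most people ever make against the same friend.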

The Physics of Coin Flipping

In the 31-page paper "Dynamical Bias in the Coin Toss," Persi Diaconis, Susan Holmes, and Richard Montgomery lay out the theory and practice of coin flipping to a degree that's just, well, downright intimidating.

Suffice to say their approach involved a lot of physics, a lot of math, motion-capture cameras, random experimentation, and an automated "coin-flipper" device capable of flipping a coin and producing Heads 100% of the time.

Here are the broad strokes of their research:

1. If the coin is tossed and caught, it has about a 51% chance of landing on the same face it was launched with. (If it starts out as heads, there's a 51% chance it will end as heads.)

2. If the coin is spun, rather than tossed, it can have a much-larger-than-50% chance of ending with the heavier side down. Spun coins can exhibit "huge bias" (some spun coins will fall tails-up 80% of the time).

3. If the coin is tossed and allowed to clatter to the floor, this probably adds randomness.

4. If the coin is tossed and allowed to clatter to the floor where it spins, as will sometimes happen, the spinning bias described above probably comes into play.

5. A coin will land on its edge around once in 6000 throws, creating a flipistic singularity.

6. The same initial coin-flipping conditions produce the same coin-flip result. That is, there's a certain amount of determinism to the coin flip.

7. A more robust coin toss (more revolutions) decreases the bias.

A good way of thinking about this is by looking at the ratio of odd numbers to even numbers when you start counting from 1.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17

No matter how long you count, you'll find that at any given point, one of two things will be true:

- You've touched more odd numbers than even numbers

- You've touched an equal number of odd numbers and even numbers

H T H T H T H T H T H T H T H T H T H T H T H T H

A coin launched Heads up behaves the same way: its faces alternate like the odd and even numbers, with Heads playing the part of the odd numbers. At any given point in time, either the coin will have spent equal time in the Heads and Tails states, or it will have spent more time in the Heads state. In the aggregate, it's slightly more likely that the coin shows Heads at a given point in time, including whatever time the coin is caught. And vice versa if you start the coin flip from the Tails position.
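The counting argument can be checked mechanically: walk along the alternating H T H T ... row and, for every possible stopping point, measure what share of the positions so far show the launch face. This is a toy illustration of the post's intuition only, assuming the coin is caught at some point along that row; it is not the physical model developed in the paper, and the function names are hypothetical.

```python
def shows_launch_face(position: int) -> bool:
    """True if the coin shows its launch face at this position (1 = launch)."""
    # Faces alternate H, T, H, T, ..., so odd positions match the launch face,
    # just as odd numbers sit at positions 1, 3, 5, ... when counting from 1.
    return position % 2 == 1


def launch_face_share(n: int) -> float:
    """Share of positions 1..n (the post's H T H T ... row) showing the launch face."""
    if n < 1:
        raise ValueError("n must be at least 1")
    hits = sum(1 for k in range(1, n + 1) if shows_launch_face(k))
    return hits / n


if __name__ == "__main__":
    for n in range(1, 11):
        print(n, round(launch_face_share(n), 3))
```

The share is exactly 0.5 for every even n and above 0.5 for every odd n, never below, which is the "equal or more Heads" pattern described above; averaged over all stopping points, the launch face comes out slightly ahead.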

The Strategy of Coin Flipping

Unlike the article on the edge in a game of blackjack mentioned previously, I've never seen a description of "coin flipping strategy" which takes the above science into account. When it's a true 50-50 toss, there is no strategy. But if we take it as granted, or at least possible, that a coin flip does indeed exhibit a 1% or more bias, then the following rules of thumb might apply.

1. Always be the chooser, if possible. This allows you to leverage Premise 1 or Premise 2 for those extra percentage points.

2. Always be the tosser, if you can. This protects you from virtuoso coin-flippers who are able to leverage Premise 6 to produce a desired outcome. It also protects you against the added randomness (read: fairness) introduced by flippers who will occasionally, without rhyme or reason, invert the coin in their palm before revealing. Tricksy Hobbitses.

3. Don't allow the same person to both toss and choose. Unless, of course, that person is you.

4. If the coin is being tossed, and you're the chooser, always choose the side that's initially face down. According to Premise 1, you'd always choose the side that's initially face up, but most people, upon flipping a coin, will invert it into their other palm before revealing. Hence, you choose the opposite side, but you get the same 1% advantage. Of course, if you happen to know that a particular flipper doesn't do this, use your better judgment.

5. If you are the tosser but not the chooser, sometimes invert the coin into your other palm after catching, and sometimes don't. This protects you against people who follow Rule 4 blindly by assuming you'll either invert the coin or you won't.

6. If the coin is being spun rather than tossed, always choose whichever side is lighter. On a typical coin, the "Heads" side of the coin will have more "stuff" engraved on it, causing Tails to show up more frequently than it should. Choosing Tails in this situation is usually the power play.

7. Never under any circumstances agree to a coin spin if you're not the chooser. This opens you up to a devastating attack if your opponent is aware of Premise 2.
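The chooser's rules above (call the initially face-down side on a toss, unless you know the flipper does not invert the coin, and call the lighter side on a spin) amount to a small decision procedure. The helper below is a hypothetical sketch that assumes the post's premises hold exactly and, as the post claims for a typical coin, that Tails is the lighter side.

```python
from typing import Optional

OTHER_FACE = {"Heads": "Tails", "Tails": "Heads"}


def recommended_call(launch_face_up: str, spun: bool,
                     flipper_inverts: Optional[bool] = None) -> str:
    """Suggest a call following the post's chooser rules (hypothetical helper).

    launch_face_up: face showing on the thumb before the toss ("Heads"/"Tails").
    spun: True if the coin is spun rather than tossed.
    flipper_inverts: whether the flipper turns the caught coin over onto the other
        palm; None means unknown, in which case the post's "most people do" applies.
    """
    if launch_face_up not in OTHER_FACE:
        raise ValueError("launch_face_up must be 'Heads' or 'Tails'")
    if spun:
        # Spin: call the lighter side, which the post assumes is Tails on a typical coin.
        return "Tails"
    if flipper_inverts is False:
        # Known non-inverter: the caught coin is revealed as-is, favoring the launch face.
        return launch_face_up
    # Inverter or unknown: the launch face ends up face down, so call the other side.
    return OTHER_FACE[launch_face_up]


if __name__ == "__main__":
    print(recommended_call("Heads", spun=False))
    print(recommended_call("Heads", spun=False, flipper_inverts=False))
```

As the post's advice to tossers points out, a flipper who randomly varies whether they invert the coin defeats this logic, so treating `flipper_inverts=None` as "inverts" is only a default guess.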

20110813

Commons in a taxonomy of goods

Commons are common pool resources. Commons are common goods. Commons are social relationships. You can find all of these descriptions for the term. Which is the correct one? All three versions are valid—at the same time!

The word "common" is the best starting point for the analysis. What is held in common within a commons is threefold: the resources that are used and cared for, the goods that result from joint activity, and the social relationships that emerge from acting together. These three aspects vary so widely across commons that no one could describe them in a reasonably complete manner.

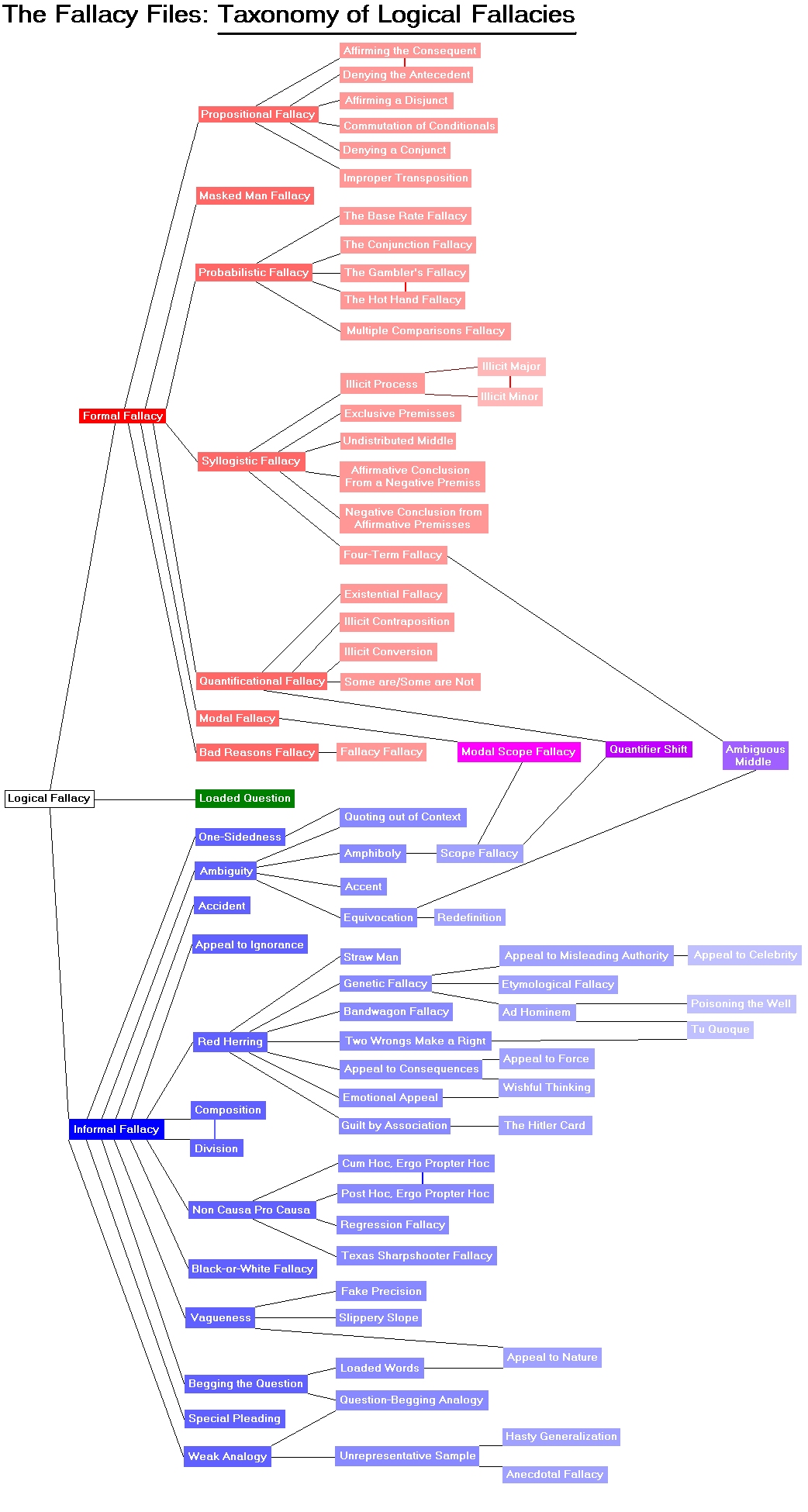

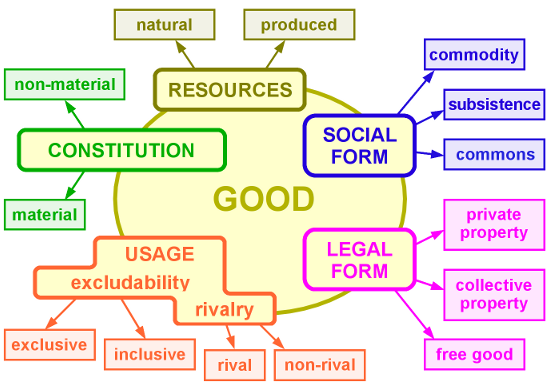

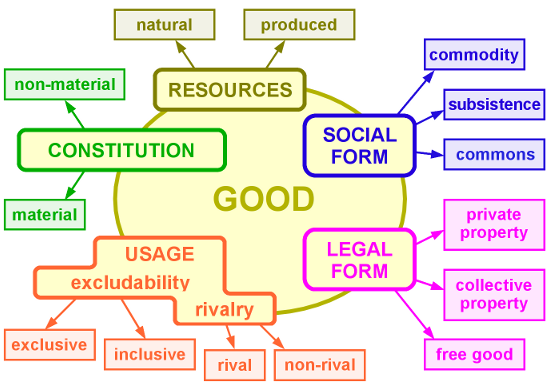

Commons stand in contrast to commodities, even though a commodity, too, is a good produced in a specific social form using resources. Traditional economics, however, usually considers resources and social forms of production only marginally, if at all. I will try to overcome this limitation with the following taxonomy of goods [Illustration 1]. I place the concept of "good" at the center and describe it through the triple definition explained above: as a common good, as a resource, and as a social form.

Illustration 1: Proposed taxonomy of "goods"

In the adjoining illustration a good is characterized by five dimensions. Besides the dimensions already mentioned, resource and social form, there are constitution, usage, and legal form. They are presented in the following paragraphs of this document, after which I will highlight the characteristics of commons once again.

Constitution

The constitution describes the type of materiality of a good. We can distinguish two types: material and non-material goods.

Material goods have a physical shape; they can be used up or destroyed. Purpose and physical constitution are linked: material goods fulfil their purpose only through their physical constitution. If the physical constitution is dismantled, the purpose is lost as well.

Non-material goods, on the other hand, are completely decoupled from any specific physical shape. This category includes services, defined by the coincidence of production and consumption, as well as preservable non-material goods. A service often does lead to a material result (a haircut, a draft text, etc.), but the service itself ends once that result is established; at that point the service has been consumed, and the result falls into the category of material goods.

Preservable non-material goods need a physical carrier. For non-digital ("analog") goods, the good can still be tightly bound to the specific material constitution of its carrier (e.g. an analog piece of music on an audiotape or a record), whereas digital goods are largely independent of the carrier medium (e.g. a digital piece of music on any digital medium).

Usage

Usage has two sub-dimensions: excludability and rivalry. They capture aspects of access and of concurrent use.

A good is used exclusively if access to it is generally prevented and only selectively granted (e.g. when a bagel is bought). It is used inclusively, i.e. non-exclusively, if everyone has access to it (e.g. Wikipedia). The usage of a good is rival (or rivalrous) if its use by one person restricts or prevents its use by others (e.g. listening to music through earphones). Usage is non-rival if it does not limit others (e.g. a physical formula).

Classical economists use this usage scheme as the authoritative characteristic of goods. But it is far too narrow. It combines two aspects that occur together in usage but have completely different causes. Exclusion results from an explicit activity of excluding people and is thus closely linked to the social form. Rivalry, on the other hand, is closely linked to the constitution of the good: an apple can only be eaten once, and the next act of eating requires a new apple.

Resources

The production of goods requires resources. Sometimes, however, nothing is produced; instead, already existing resources are used and maintained. In this case the resource itself is the good to be preserved, for instance a lake. Usually we find mixed cases, because no produced good can do without the resource of knowledge, which has been created and made available by others. By resources, we generally mean non-human sources.

The illustration distinguishes between natural and produced resources. Natural resources are pre-existing raw resources, which, however, are seldom found in environments untouched by humans. Produced resources are created material or non-material preconditions for further use in the production of goods or resources in the broadest sense.

Social form

The social form describes the mode of (re-)production and the relations that people enter into with each other in doing so. Three social forms of (re-)production must be distinguished: commodity, subsistence, and commons.

A good becomes a commodity if it is generally produced for exchange (sale) on markets. Exchange has to occur because, in capitalism, production is a private activity: each producer produces separately from the others, and all are governed by competition and the pursuit of profit. The measure of exchange is value, i.e. the average socially necessary abstract labor required to produce the commodity at a given historical moment. The medium of exchange is money. The measure of usage is use value, the “other side” of (exchange) value. A commodity is thus a social form: the indirect, exchange-mediated way in which goods obtain general societal validity. Its preconditions are scarcity and exclusion from access to the commodity, because otherwise no exchange would take place.

A good takes the form of subsistence if it is not produced generally for others, but only for personal use or for the benefit of personally known others (family, friends, etc.). Here, exchange does not occur, or only in exceptional cases; instead, the good is passed on, taken, and given according to directly agreed social rules. A transitional form towards the commodity is barter, the direct exchange of goods without the mediation of money.

A good becomes a commons if it is generally produced or maintained for others. The good is not exchanged, and its usage is generally bound to socially agreed rules. It is produced or maintained for general others insofar as these are neither personally determined others (as with subsistence) nor exclusively abstract others with whom there is no further relationship (as with the commodity), but concrete communities agreeing on rules for the usage and maintenance of the commons.

Legal form

The legal form indicates the possible legal codes to which a good can be subjected: private property, collective property, and free good. Legal arrangements are necessary where society mediates partial interests; they form a regulatory framework for social interaction. As soon as general interests are part of the mode of (re-)production itself, legal forms can recede in favor of concrete, socially agreed rules, as is the case within the commons.

Private property is a legal form that grants an owner the power of disposal over a thing, with exclusive control over it. Property abstracts both from the constitution of the thing and from its concrete possession. Private property can become merchandise: it can be sold or commercialized.

Collective property is collectively owned private property or private property used for collective purposes. It includes common property and public (state) property. Everything said about private property basically applies here as well. There are various forms of collective property, for instance joint-stock companies, homeowners’ associations, and nationally owned enterprises.

Free goods (also res nullius, terra nullius, or no man’s land) are goods under free access that are not regulated legally or socially. The often-cited “Tragedy of the Commons” is in fact a tragedy of no man’s land, which is overused or destroyed because rules of usage are missing. Such no man’s lands still exist today, e.g. the high seas and the deep sea.

Commons: jointly creating life

Peter Linebaugh captures the inseparable connection between good and social activity in one sentence: “There is no commons without commoning.” Commons cannot exist without a corresponding social practice of a community. The size of this community is not fixed; it depends considerably on the re-/produced resource. The re-/production of a local wood will presumably be taken on by a local community, while the preservation of the world climate certainly requires the constitution of a global community. In that case, the state can take over the community’s role by assuming the re-/production of the resource as a trustee. But this is not the only possible option.

Both the size of the community and the rules depend on the character of the resource. For a threatened wooded area, it is reasonable to agree on more restrictive rules of use than for a resource that can easily be copied. Free software, for instance, can safely be made available under a free access regime, i.e. a social rule of use that explicitly excludes nobody.

The “freedom” to plunder and exploit, which commonly prevails under the regime of separate private production of goods as commodities, finds its limit in the freedom of others to use the resource. In particular, by preventing arbitrary plundering of a depletable resource, the needs of general others who do not currently use the resource are taken into account. The community closely connected to the resource is merely entrusted with producing and reproducing it in a generally useful way. It is their “task” to pass the resource on to future generations in an improved state. However, there is no guarantee that the resource will not be destroyed anyway. The history of capitalism is also a history of the violent destruction and privatization of the commons.

Within the commons, production and reproduction can hardly be separated: production simultaneously serves reproduction. For depletable resources, rules of usage ensure that the resource can regenerate; for copyable digital goods, they ensure that the social network producing the resource is maintained. However, a distinction must be made between a common-pool resource as such and goods produced on the basis of that resource. Produced goods can become commodities if they are sold on markets. The goal of the socially agreed rules of use within the community is to limit the use of the resource and to prevent it from being overused and ultimately destroyed.

There have always been commons in human history. However, their historical role and function have changed dramatically. In earlier times, commons were a general foundation of human livelihood, while with the rise of class societies they were integrated into various regimes of exploitation. Capitalism is the culmination of the exploitation of general human living conditions; carried by an abstract notion of freedom, it is unable to guarantee the survival of the human species. This is because common interests are not part of its mode of production but have to be imposed afterwards, via law and state, on the blind workings of partial interests. It is therefore necessary to aim at a new, socially regulated mode of production in which common interests are part of the mode of production itself.

Moreover, capitalism has split off essential moments of production from societal life and banished them into a sphere of reproduction. Production as “economy” and reproduction as “private life” have been separated. Private production is structurally blind and only mediated after the fact. It could therefore only expand at the expense of subsistence and commons production, which in turn are needed to compensate for the (physical and psychological) consequences of the “economy”. Private production has always depended on complementary subsistence and commons production; it permanently takes from the sphere of the commons without giving anything back.

The commons have the potential to replace the commodity as the determining form of re-/producing societal living conditions. Such a replacement can only occur if communities constitute themselves for every aspect of life in order to take back “their” commons and reintegrate them into a new, needs-oriented logic of re-/production.

The word “common” is the best starting point for the analysis. What is common in a commons is threefold: the resources that are used and cared for, the goods resulting from joint activities, and the social relationships emerging from acting together. These three aspects vary so widely across commons that no one could describe them in a reasonably complete manner.
